Curtail ReGrade

One Solution for Testing and Troubleshooting Across Dev, QA, and Operations

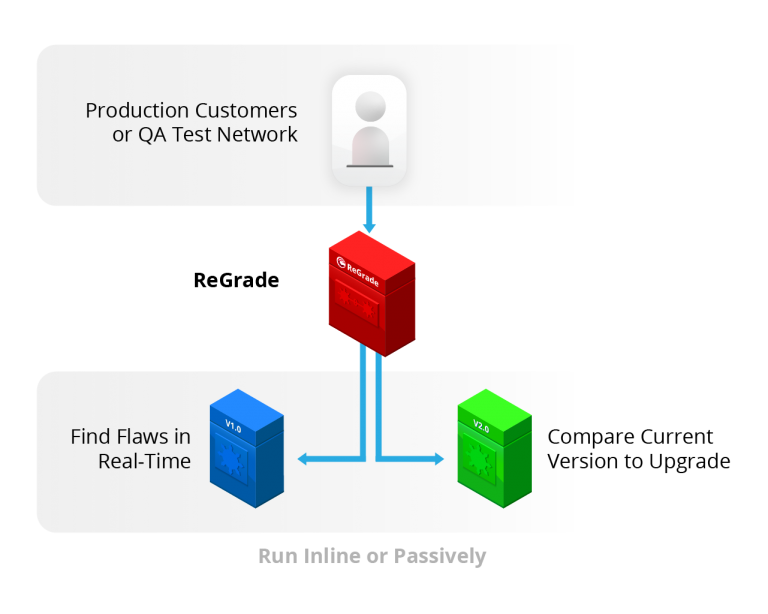

Curtail’s patented ReGrade detects, records, and reports any anomalous application behavior among different versions of software in real time. Without requiring any scripted tests to be written, this novel technology provides DevOps, SREs (Site Reliability Engineers), security, software, and operations teams with an automated tool for evaluating release candidate behavior against real production traffic before going live.

Today

Fix after launch

Write discrete tests with limited coverage

Security added later

Manually locate source of problems

Incomplete, after-the-fact log analysis

Curtail's Approach

Preview and fix before going live

See differences between versions without having to know what you are looking for

Detect zero-day vulnerabilities with no additional effort

Automatically locate precise bug origination and use playback to remediate

Continuously monitor real data and network services behavior

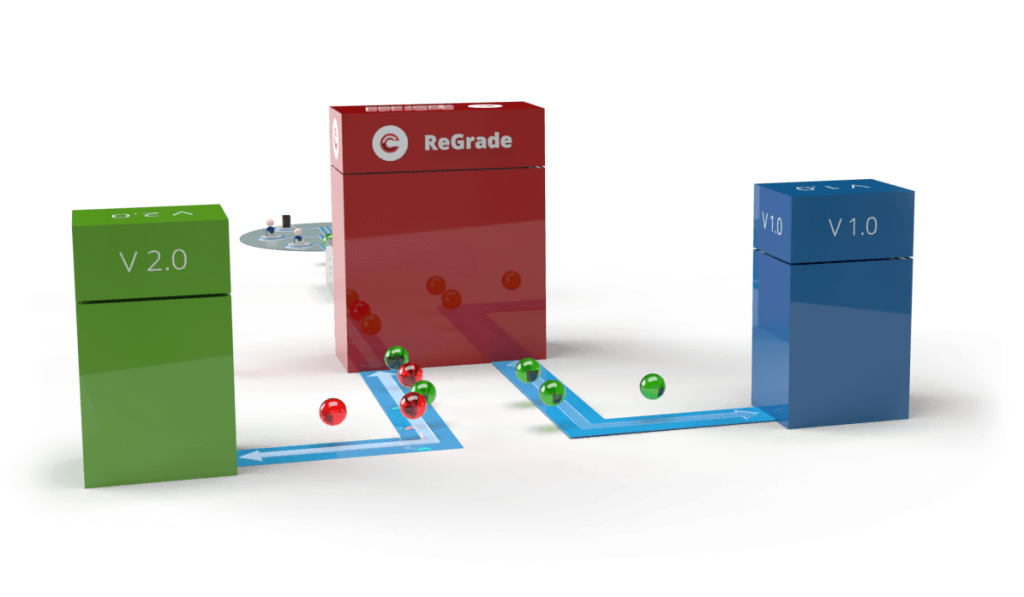

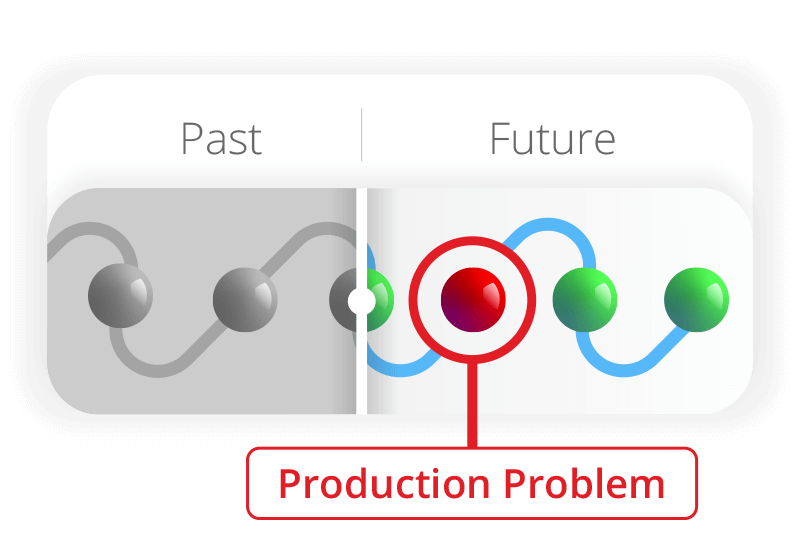

Simplify Complex Testing

Complex software systems can exhibit unexpected and unwanted behavioral changes when code is updated. Curtail ReGrade compares how a new version of software behaves against the production version using live user data, showing where flaws are and how to fix them before release. It does so by running the same inputs through the production version and the release candidate. Testing with ReGrade’s intelligent comparison engine does not disrupt the end user experience. Behind the scenes, ReGrade documents any discrepancy in the network traffic and allows developers to replay the inputs and resolve the problems. QA no longer needs to guess where the problem is, so finding and resolving defects is much easier.

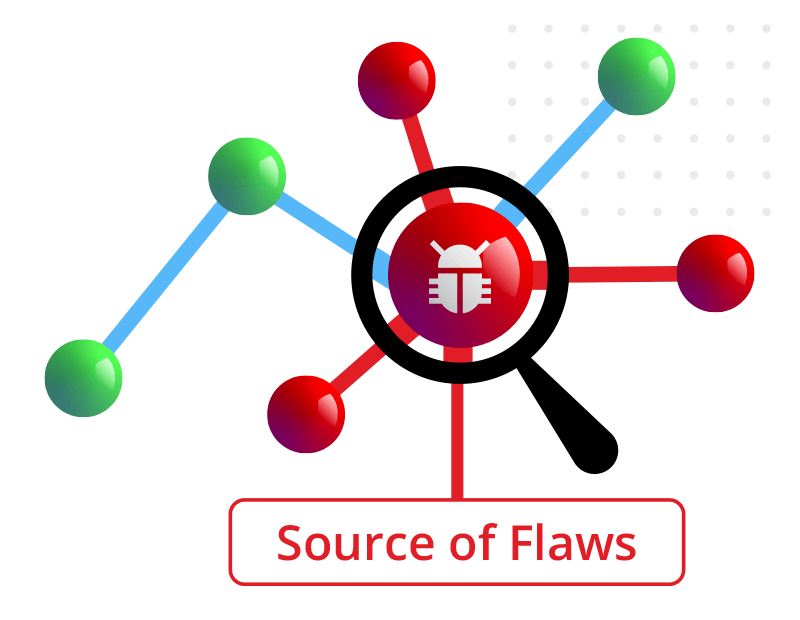

Pinpoint Software Behavior Changes With Our Network Traffic Comparison

ReGrade gives developers pinpoint information about what behavior in a release candidate causes a discrepancy. ReGrade can identify the exact behavioral changes that occurred and provide detailed information about the interactions with the network service that led to the unexpected result.

The comparison result is a distinct piece of data that tracks side-by-side performance and network responses, mapping and tracing the various IDs to find any differences that could indicate a software bug or security anomaly.

Given identical inputs, the outputs of a new software version should differ only to the extent that release requirements introduced changes. Comparing these outputs allows the discovery of unexpected changes or regressions introduced by the new version.
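The idea in the paragraph above can be illustrated with a minimal Python sketch. It is not ReGrade's implementation: `trusted_version`, `candidate_version`, and the `expected_fields` allow-list are hypothetical stand-ins, and real network services would be compared at the traffic level rather than as in-process functions.

```python
from typing import Any, Dict, Iterable, List, Set, Tuple

Response = Dict[str, Any]
ABSENT = "<absent>"  # marker for a field that one side did not return


def trusted_version(request: Dict[str, Any]) -> Response:
    """Hypothetical stand-in for the production service."""
    return {"status": 200, "total": request["qty"] * request["price"]}


def candidate_version(request: Dict[str, Any]) -> Response:
    """Hypothetical release candidate.

    It adds a 'currency' field on purpose and, by mistake, truncates totals.
    """
    return {
        "status": 200,
        "total": int(request["qty"] * request["price"]),
        "currency": "USD",
    }


def diff_responses(old: Response, new: Response) -> Dict[str, Tuple[Any, Any]]:
    """Return {field: (old_value, new_value)} for every field that differs."""
    return {
        field: (old.get(field, ABSENT), new.get(field, ABSENT))
        for field in sorted(set(old) | set(new))
        if old.get(field, ABSENT) != new.get(field, ABSENT)
    }


def unexpected_changes(
    requests: Iterable[Dict[str, Any]], expected_fields: Set[str]
) -> List[Dict[str, Any]]:
    """Feed identical inputs to both versions and keep only surprising diffs."""
    findings = []
    for request in requests:
        diff = diff_responses(trusted_version(request), candidate_version(request))
        surprises = {f: v for f, v in diff.items() if f not in expected_fields}
        if surprises:
            findings.append({"request": request, "diff": surprises})
    return findings


if __name__ == "__main__":
    sample = [{"qty": 2, "price": 5.0}, {"qty": 3, "price": 1.5}]
    for finding in unexpected_changes(sample, expected_fields={"currency"}):
        print(finding)
```

In this sketch the intentional `currency` field is filtered out as an expected change, while the truncated total on the second request is reported as a regression.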

Every network service is a software package with network inputs and outputs (behaviors). Any change in user experience caused by changes to the software must manifest as a difference in these network behaviors. Comparing behavior between software versions allows discovery of changes to user experience that may not be reflected in the log data. If there is no logging to provide observability into the changed behavior, then no amount of analysis or AI can discover these user-impacting changes.

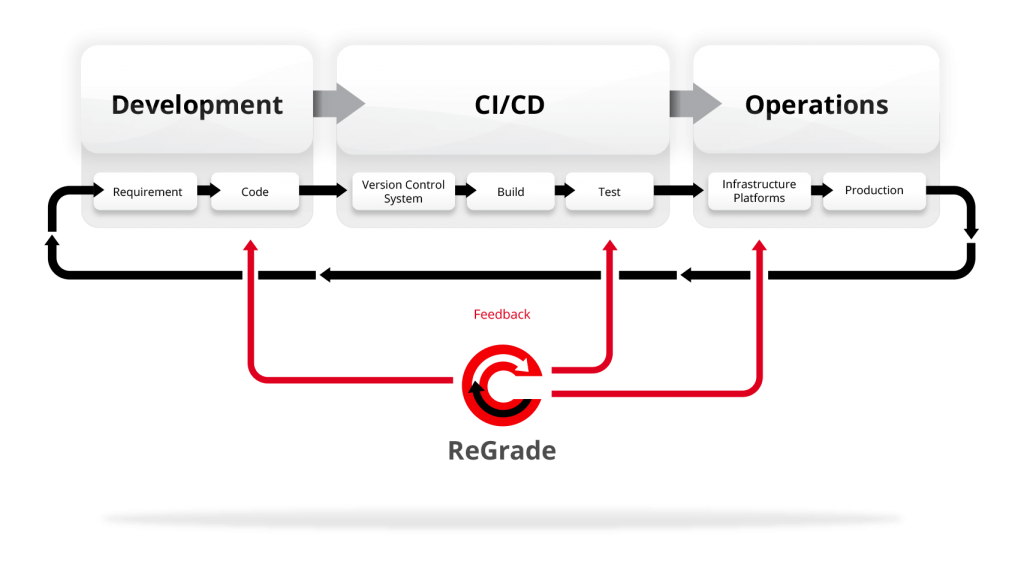

Test Early. Test Always.

ReGrade becomes a key test at multiple points within the CI/CD pipeline.

ReGrade can be deployed in various scenarios:

Next Generation Canary (Passive Mode)

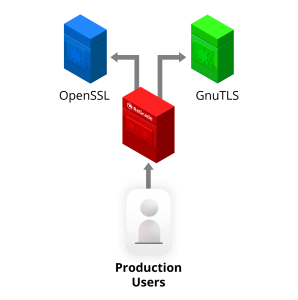

Open Source Alternatives

Compare open source alternatives for capability and performance before release.

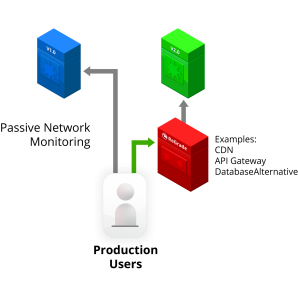

Infrastructure and Cloud Migrations

Validate your new environment doesn’t break your existing software.

Replay

Debug production failures quickly with immediate feedback and actionable data.

Flexible Deployments and Powerful Response Capabilities

Getting started with ReGrade is easy. It can be configured to run on-prem or in cloud-based or virtual environments.

For any scenario, there are three primary implementation modes:

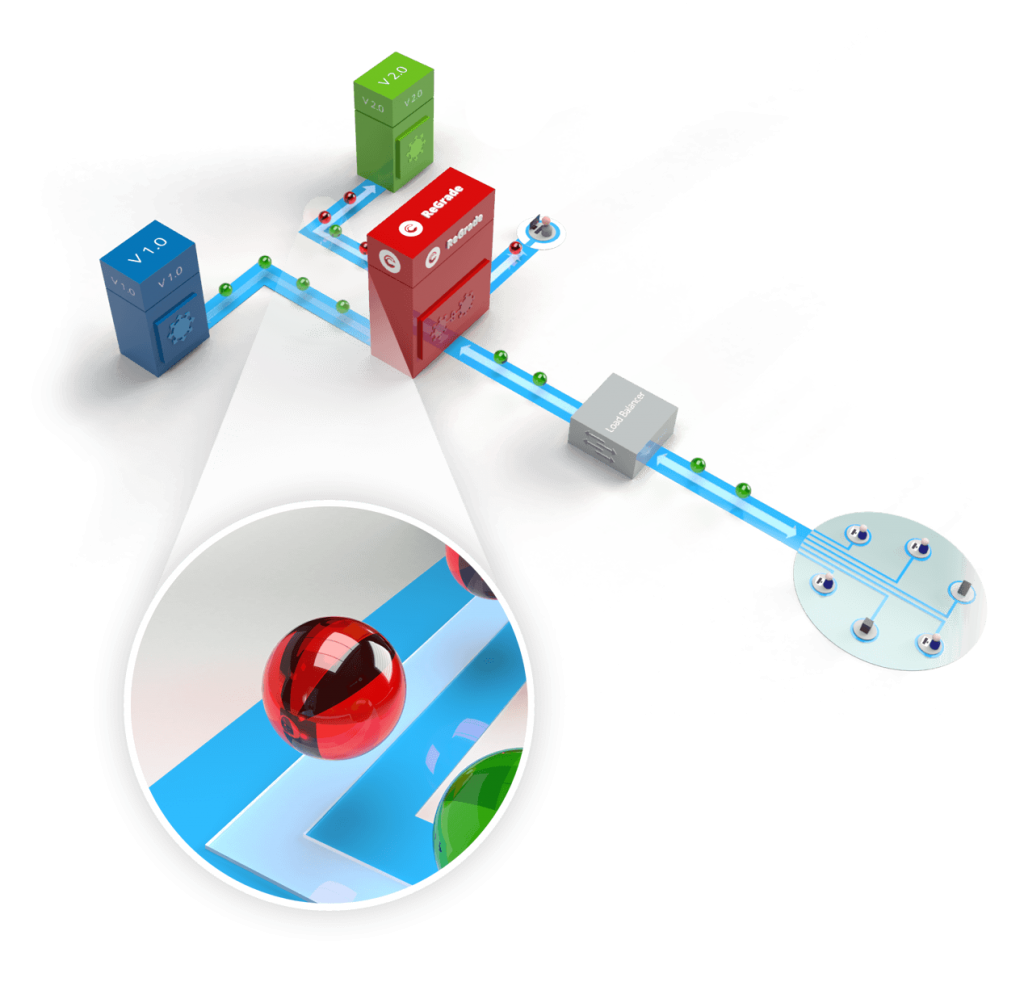

1) Replay of packet capture data

2) Inline monitoring

3) Passive monitoring

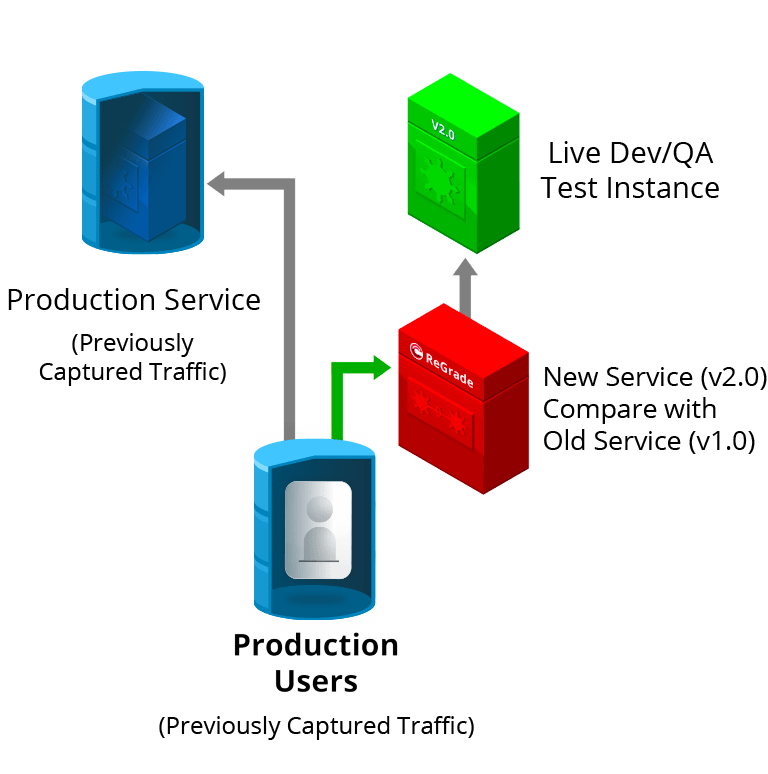

1) Replay of Packet Capture Data

Replay of packet capture data can help developers find bugs early. This is the simplest way to get your development and QA staff going with ReGrade. Simply perform a network packet capture of the test traffic of interest, including real customer data, against your trusted software instance. Then, replay that captured traffic to the release candidate using ReGrade. Any differences may indicate problems with the release candidate, or they may reflect known changes that were introduced intentionally as part of the software upgrade. For development organizations that are shifting left, ReGrade is a perfect solution.
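As a rough illustration of the capture-and-replay workflow, the Python sketch below replays recorded requests against a candidate and reports responses that differ from what the trusted instance returned. Everything here is an assumption made for the example: the JSON-lines `CAPTURE` format, the `release_candidate` function, and the `replay` helper are hypothetical, and a real packet capture would need a protocol-aware parser rather than `json.loads`.

```python
import json
from typing import Any, Callable, Dict, Iterator, List, Tuple

Message = Dict[str, Any]

# Hypothetical capture: one JSON object per line, holding a request and the
# response the trusted instance gave when the traffic was recorded.
CAPTURE = """\
{"request": {"path": "/users/1"}, "response": {"status": 200, "body": "alice"}}
{"request": {"path": "/users/2"}, "response": {"status": 200, "body": "bob"}}
{"request": {"path": "/users/9"}, "response": {"status": 404, "body": ""}}
"""


def load_capture(text: str) -> Iterator[Tuple[Message, Message]]:
    """Yield (request, recorded_response) pairs from the capture text."""
    for line in text.splitlines():
        if line.strip():
            record = json.loads(line)
            yield record["request"], record["response"]


def release_candidate(request: Message) -> Message:
    """Hypothetical candidate that regresses to a 500 for unknown users."""
    users = {"/users/1": "alice", "/users/2": "bob"}
    body = users.get(request["path"])
    if body is None:
        return {"status": 500, "body": ""}
    return {"status": 200, "body": body}


def replay(capture_text: str, target: Callable[[Message], Message]) -> List[Message]:
    """Replay every recorded request against target and collect mismatches."""
    mismatches = []
    for request, recorded in load_capture(capture_text):
        actual = target(request)
        if actual != recorded:
            mismatches.append(
                {"request": request, "recorded": recorded, "actual": actual}
            )
    return mismatches


if __name__ == "__main__":
    for mismatch in replay(CAPTURE, release_candidate):
        print(mismatch)
```

Each mismatch keeps the original request alongside both responses, so the failing input can be replayed again while debugging.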

2) Inline Monitoring

Side-by-side comparison with inline ReGrade monitoring is well-suited for quality assurance cases. You can run the trusted version and the release candidate side-by-side and have the ReGrade engine process the comparisons actively inline. ReGrade takes care of any duplication of data. When monitoring real customer data, customers see only the responses from the production system. The information can then be used to identify any necessary changes in the code, or to perform the upgrade automatically if the ReGrade comparison testing does not indicate any unexpected differences. This deployment option suits developers and QA personnel (right up to release) who can set up and examine side-by-side versions inline.
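The inline pattern described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how ReGrade is built: `InlineComparator`, `production`, and `candidate` are hypothetical names, and the handlers are plain functions rather than network services.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]
Handler = Callable[[Message], Message]


def production(request: Message) -> Message:
    """Hypothetical trusted version."""
    return {"status": 200, "greeting": "Hello, " + request["name"]}


def candidate(request: Message) -> Message:
    """Hypothetical release candidate that crashes on an empty name."""
    if not request["name"]:
        raise ValueError("empty name")
    return {"status": 200, "greeting": "Hello, " + request["name"]}


class InlineComparator:
    """Duplicate each request to both versions; answer only from production."""

    def __init__(self, production_handler: Handler, candidate_handler: Handler):
        self.production_handler = production_handler
        self.candidate_handler = candidate_handler
        self.discrepancies: List[Message] = []

    def handle(self, request: Message) -> Message:
        prod_response = self.production_handler(request)
        try:
            # The candidate gets its own copy so it cannot alter the original.
            cand_response = self.candidate_handler(dict(request))
        except Exception as exc:  # a candidate crash must never reach the user
            cand_response = {"error": repr(exc)}
        if cand_response != prod_response:
            self.discrepancies.append(
                {
                    "request": request,
                    "production": prod_response,
                    "candidate": cand_response,
                }
            )
        return prod_response  # the end user only ever sees this response


if __name__ == "__main__":
    comparator = InlineComparator(production, candidate)
    print(comparator.handle({"name": "Ada"}))
    print(comparator.handle({"name": ""}))
    print(comparator.discrepancies)
```

The design choice worth noting is that candidate failures are caught and recorded as discrepancies, so a broken release candidate cannot affect what the customer receives.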

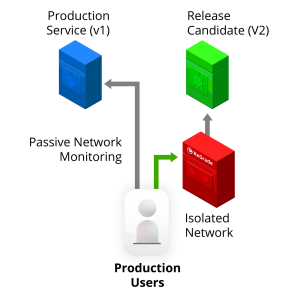

3) Passive Monitoring

Passive ReGrade monitoring is best suited for production operations environments. When monitoring real customer data, customers see only the responses from the production system. You can run the trusted version and the release candidate side-by-side and have the ReGrade engine process the comparisons passively. ReGrade takes care of any duplication of data. The comparison and analysis can be used to identify problems in the code or to move forward with the upgrade. For DevOps production professionals aspiring to true CI/CD implementations, this is the preferred deployment model: development and quality assurance testing can be performed in real time, with or without production traffic, and the upgrade can be performed automatically if the tests check out. The comparison can also continue after the upgrade to keep monitoring for any changes or differences that may show up in production (the shift-right testing approach).
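The "upgrade automatically if the tests check out" step amounts to a promotion gate in the pipeline. A minimal Python sketch of such a gate follows. The `ComparisonSummary` type, the `should_promote` function, and the `min_compared` threshold are all hypothetical choices for this example and do not describe ReGrade's actual output or configuration.

```python
from dataclasses import dataclass


@dataclass
class ComparisonSummary:
    """Hypothetical summary of a side-by-side comparison run."""

    compared: int                # request/response pairs compared
    unexpected_differences: int  # differences not explained by release notes


def should_promote(summary: ComparisonSummary, min_compared: int = 1000) -> bool:
    """Promote only with enough compared traffic and no unexpected differences."""
    if summary.compared < min_compared:
        return False  # too little traffic to trust a clean result
    return summary.unexpected_differences == 0


if __name__ == "__main__":
    print(should_promote(ComparisonSummary(compared=5000, unexpected_differences=0)))
    print(should_promote(ComparisonSummary(compared=5000, unexpected_differences=3)))
    print(should_promote(ComparisonSummary(compared=40, unexpected_differences=0)))
```

Requiring a minimum sample size guards against promoting a release simply because too little traffic was observed to surface a difference.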

Limitations of Current Testing

Today’s software testing is by nature incomplete. Whether manual or automated, discrete tests often don’t reflect real user experience.

Monitoring looks in the rearview mirror, while ReGrade looks at software with headlights illuminating the road hazards ahead.

There are many ways to look at your software quality, security, and performance. Most software quality or inspection tools look for known flaws (vulnerability scans) or use static or dynamic tests that are intended to replicate normal production traffic but often do not. Other approaches, like RASP (runtime application self-protection), require the inspection software to run within the software under test to look for code errors. These techniques each find a certain class of bugs and flaws, but they do not show how your software behaves compared with another working version or how it would perform against normal customer traffic. There are also techniques like canary and blue/green testing, which require coordination with operations and QA. In these cases, a small group of users is switched to a new version of the software. But this allows only some of the traffic to be sampled, and the organization is taking a risk that a subset of users will be negatively impacted.

Curtail’s patented, systematic testing approach provides developers and QA actionable information on where software has changed. See the problems ahead of time before it’s too late.

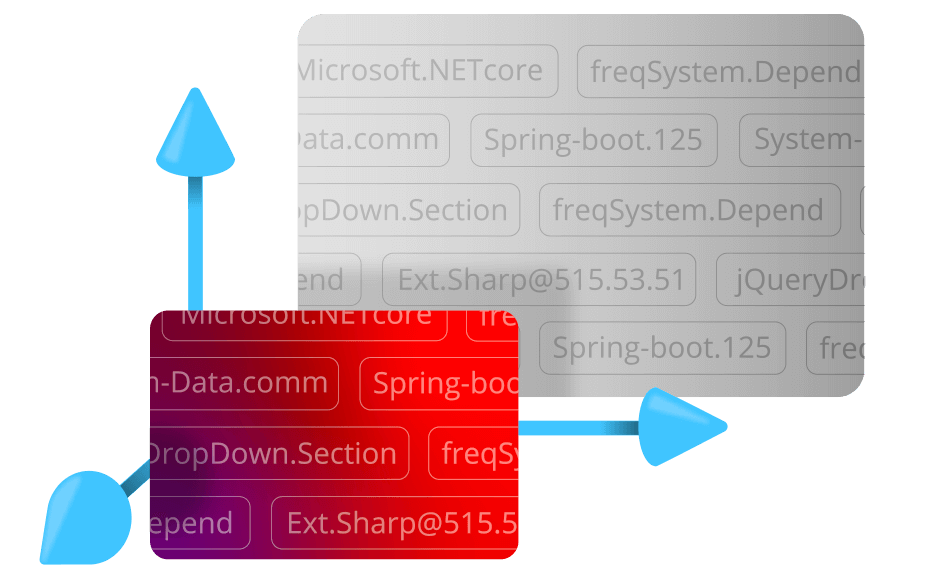

Discrete Testing and Logs

Discrete testing is how most unit and integration testing is done today. The tests are a set of pre-defined inputs that are fed to the software under test. The output from each fixed input is then evaluated against a finite set of pre-defined criteria. If all of the defined criteria are met, the test passes. These criteria may or may not include evaluation of log content generated by the input.

In any case, there are two major ways that software flaws are released despite discrete testing. First, the inputs generated by the tests are often not representative of what users in production really do. User interactions that are not represented in the test inputs may trigger bugs that are not detected until after production deployment. Second, inputs that are present in the tests may trigger bugs that still go undetected because the test criteria perform only a limited set of checks. The test may pass because the defined criteria were met (e.g., the reply contained the expected information and was returned within the allowable time window) without detecting unexpected behavioral changes (e.g., a regression that includes extra information that was supposed to be kept secret).

These (often automated) tests look only for specific issues. They can’t detect everything that could possibly go wrong or observe the overall behavior of the software. As a result, bugs and problems in the code can be pushed live without the required testing, resulting in network downtime, lost revenue, and frustrated end users.
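The second failure mode, a test that passes while a regression slips through, is easy to reproduce. The Python sketch below is a toy example with hypothetical names (`get_profile_v1`, `get_profile_v2`, `discrete_test`, `extra_fields`). It only illustrates the gap between checking fixed criteria and comparing whole responses.

```python
from typing import Any, Callable, Dict, List

Profile = Dict[str, Any]


def get_profile_v1(user_id: int) -> Profile:
    """Hypothetical trusted version."""
    return {"id": user_id, "name": "Ada"}


def get_profile_v2(user_id: int) -> Profile:
    """Hypothetical candidate with a regression: it leaks an internal field."""
    return {"id": user_id, "name": "Ada", "password_hash": "3f2a9c"}


def discrete_test(get_profile: Callable[[int], Profile]) -> bool:
    """A typical discrete test: fixed input, finite list of criteria."""
    reply = get_profile(7)
    return reply["id"] == 7 and reply["name"] == "Ada"


def extra_fields(old: Profile, new: Profile) -> List[str]:
    """Fields present in the new response that the old one never returned."""
    return sorted(set(new) - set(old))


if __name__ == "__main__":
    # Both versions satisfy the pre-defined criteria, so the test passes twice.
    print(discrete_test(get_profile_v1), discrete_test(get_profile_v2))
    # Comparing the complete responses exposes the leaked field.
    print(extra_fields(get_profile_v1(7), get_profile_v2(7)))
```

The discrete test has no assertion about fields it did not anticipate, so only a comparison against the previous version's full output reveals the leak.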

Logs are by definition after the fact, and they don’t tell you everything. Often problems are not represented in log data. Testing that produces only logs is insufficient for diagnosing hidden regressions in application behavior and for detecting zero-day software vulnerabilities. The key is to look at how your software will behave relative to prior versions when presented with a variety of traffic inputs, including live data.

Other methods of detecting bugs or security vulnerabilities after the fact also fall short of desired results. Bug bounty programs rely on groups of hackers finding vulnerabilities in commercial public-facing software. But even when these programs succeed, the bugs are found after release. And the hackers may not necessarily play fair when they do find a bug or flaw.

Our Customers...

...leverage a unique way to observe unexpected and expected software changes

...pinpoint the source of flaws with precision using one patented behavioral comparison approach

...have a new source of primary data that doesn’t rely on logs

...don't risk bad user experiences, not even for 1% of users

How ReGrade Works

Curtail’s patented ReGrade detects, records, and reports any anomalous application behavior among different versions of software in real time. Without requiring any scripted tests to be written, this new technology provides DevOps and release teams with an automated tool for evaluating release candidate behavior against real production traffic before going live.
